Breaking the bias cycle: why your AI hiring tools are failing, and how to fix them.

Article created in collaboration with Marie Chaproniere, Founder of Behind The Mask Community.

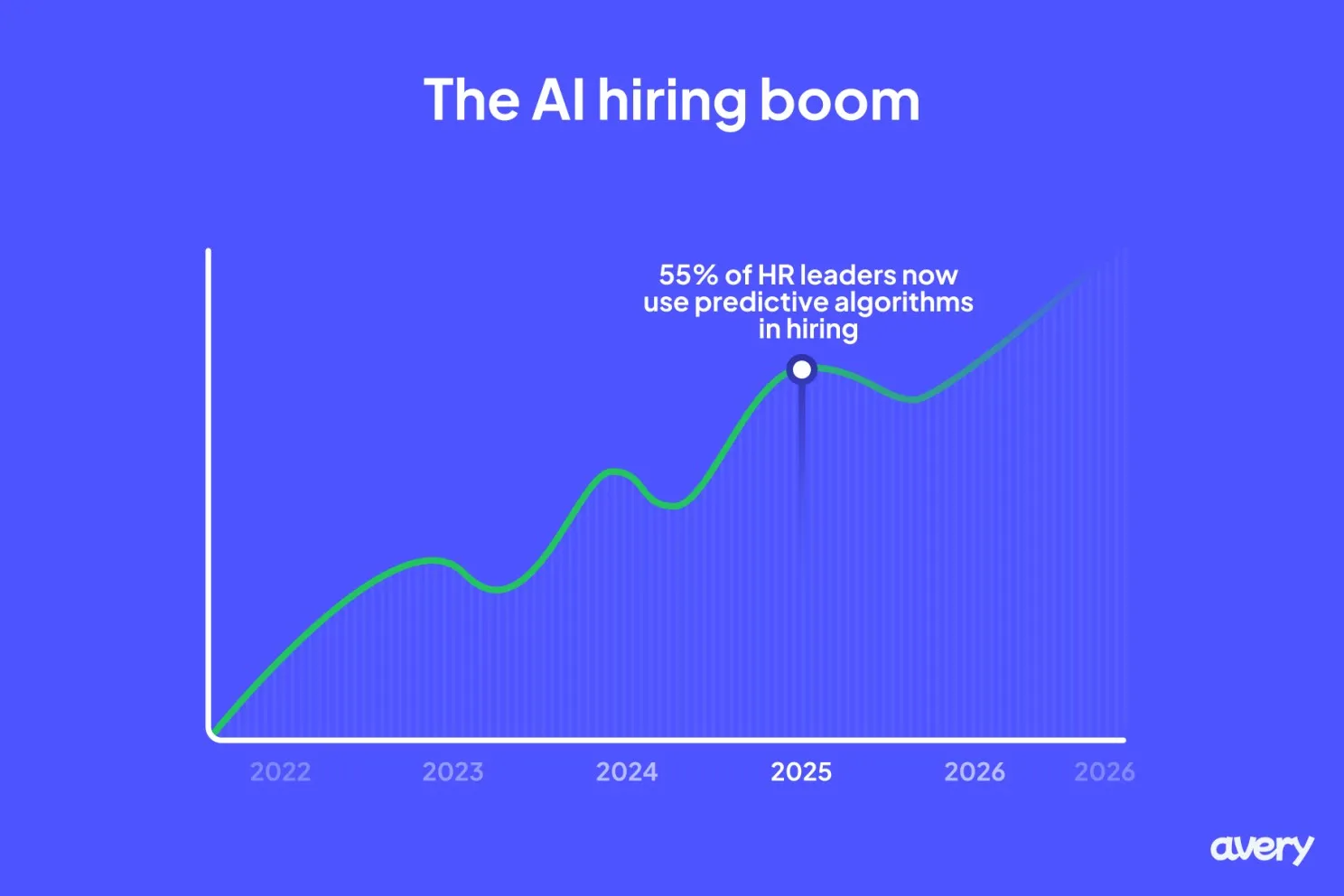

Here's what 55% of HR leaders using AI in hiring wish they'd known from the start.

We've all heard the horror stories. Amazon's experimental AI recruiting tool that learned to downgrade women's resumes. Recruitment algorithms that penalized career gaps, disproportionately screening out the very diversity we desperately need. Yet here we are, with 55% of HR leaders now using predictive algorithms in hiring: tools that often create new modes of discrimination rather than eliminating existing biases.

The uncomfortable truth? Most organizations are implementing AI hiring tools without asking the fundamental questions that could prevent these failures.

The real problem isn't the technology; it's our approach

"I've always led this way and built Talent Acquisition processes with fairness and humans at the center, and 'AI fairness auditing' is simply an evolution of that work," says Marie Chaproniere, Founder of Behind The Mask Community and a Talent Acquisition leader with 15 years of experience across startups and large tech organizations, where she's led global teams and built systems from scratch. "With every hiring decision and every new tool we implement, it's important to determine the blockers and biases. I've been advocating for hiring with intention for as long as I can remember."

Marie's perspective cuts through the noise of technical jargon to focus on what really matters: building processes that put fairness and humans at the center, not as an afterthought.

But here's where most organizations get it wrong. They rush to implement the latest AI tools without ensuring their foundational processes aren't already broken.

"Like with any new adoption, we have to make sure we have the basics down and our processes aren't broken before we start jumping on every latest tool that hits the market just to try to keep up. When we do that, we're hugely at risk of a lack of transparency and added bias," Marie explains.

This reactive approach is exactly what research shows is happening across the industry. Organizations are backfilling solutions based on assumptions rather than building intentional, fair processes from the ground up.

The misconceptions that are sabotaging your success

Marie identifies two misconceptions plaguing HR teams, and the second is the more dangerous:

"Obviously, the first one is that AI will take your job. But I think we're all bored of that narrative. The second is that AI will solve your problems, but the reality is that most of the time you're not sure where AI can truly add value and what problems you're trying to solve with it."

This hits at the heart of why so many AI hiring initiatives fail. Without a clear problem definition, organizations end up with sophisticated tools that amplify existing biases rather than address them.

The regulatory landscape is catching up to this reality. New York City's Local Law 144 now requires an annual independent bias audit for automated employment decision tools, and the EU's AI Act classifies AI systems used in recruitment as high-risk. These aren't bureaucratic hurdles; they're a recognition that the stakes are too high to leave AI hiring unchecked.
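The arithmetic behind such an audit is simpler than the regulation might suggest. The following is a minimal sketch, assuming you can export applicant and selection counts per demographic group for a single screening step. `GroupOutcome`, `impact_ratios` and `flag_below` are hypothetical names, and the counts are invented for illustration. The sketch computes each group's selection rate and its impact ratio. The impact ratio is that group's selection rate divided by the rate of the most-selected group, and it is the measure the NYC rules for Local Law 144 audits define. The 0.8 flag is the "four-fifths" rule of thumb from US federal selection guidelines, used here only as an illustrative threshold. Local Law 144 itself does not set a pass/fail cutoff.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Per-group counts for one screening step (hypothetical structure)."""
    group: str
    applicants: int
    selected: int

    @property
    def selection_rate(self) -> float:
        # Share of this group's applicants who were selected at this step.
        return self.selected / self.applicants if self.applicants else 0.0


def impact_ratios(outcomes: list[GroupOutcome]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    best = max(o.selection_rate for o in outcomes)
    if best == 0:
        raise ValueError("No group had any selections; impact ratios are undefined.")
    return {o.group: o.selection_rate / best for o in outcomes}


def flag_below(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups under an illustrative threshold (four-fifths rule of thumb, not an LL144 cutoff)."""
    return sorted(g for g, r in ratios.items() if r < threshold)


if __name__ == "__main__":
    # Illustrative counts only, not real audit data.
    data = [
        GroupOutcome("men", applicants=400, selected=120),
        GroupOutcome("women", applicants=300, selected=60),
    ]
    ratios = impact_ratios(data)
    for group, ratio in ratios.items():
        print(f"{group}: impact ratio {ratio:.2f}")
    print("Below 0.8:", flag_below(ratios))
```

With these made-up counts, women are selected at 20% and men at 30%. That gives women an impact ratio of about 0.67, which triggers a flag. A flag like this is a prompt to investigate the step, not proof of discrimination on its own.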

Where the rubber meets the road: real audit discoveries

Marie's experience reveals how AI bias often mirrors human bias in unsettling ways. "The easiest examples are scarily similar to human biases. Not passing candidates who had too long a career break or too many gaps in their resume, which of course completely overlooks skills and experience, is a huge red flag and leads to even less diversity across the entire process. This led to a huge drop in our pass-through rate, particularly with women."

The solution?

"We were earlier-stage adopters of AI, and at this point the best we could do was acknowledge this and reintroduce a human review to this step of the process." It's a reminder that AI augmentation, not replacement, often yields the best outcomes.

Another revealing example emerged during technical assessments. "In tech we're often looking to balance our talent pool with more women in the pipeline, and we noticed that more women would drop off during our technical assessment. This gave us an opportunity to explore, dive into the why, and recalibrate and improve." This kind of pattern is exactly what systematic bias auditing can surface before it becomes a persistent problem.

The framework that actually works

When it comes to implementing bias audits, Marie emphasizes that planning is foundational. "The planning, for sure; this has to be foundational. I'd love to dive straight into the data and start from there, but then we're really just reacting to what we see rather than what we need. We end up backfilling based on our assumptions, and we'll end up pivoting anyway and spending more time justifying our next move. Then you can move on to auditing, testing for fairness, etc."

This aligns with emerging best practices in AI audit methodology. The most effective frameworks begin with comprehensive planning that includes stakeholder engagement, risk assessment, and clear objectives before diving into technical analysis.

But Marie keeps it practical:

"Honestly, I've always tried not to overwhelm our teams. All we want to know from the metrics is who we're hiring, who isn't getting screened, and why. I try not to get too bogged down in the technicalities, but ask the stuff that makes sense to us, like: are our processes fair, are all groups making it through the process, and is there a pattern?"

This approach bridges the gap between complex fairness metrics like demographic parity and equal opportunity, and the actionable insights HR teams actually need.
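The difference between those two metrics is easier to see in code. Demographic parity compares how often each group is advanced at all. Equal opportunity compares how often qualified candidates in each group are advanced, which amounts to a comparison of true-positive rates. The following is a minimal sketch, assuming a hypothetical per-candidate record with a group, a "qualified" label and the tool's decision. The source of that label is an assumption here (for example, a later structured human review). In practice, getting that label right is the hardest part.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Candidate(NamedTuple):
    group: str       # demographic category (hypothetical field)
    qualified: bool  # assumed ground-truth label, e.g. from a structured human review
    advanced: bool   # whether the tool passed this candidate to the next stage


def _rate(outcomes: list[bool]) -> float:
    """Share of True values in a non-empty list."""
    return sum(outcomes) / len(outcomes)


def fairness_gaps(candidates: Iterable[Candidate]) -> dict[str, float]:
    """Largest between-group gaps for two common fairness metrics.

    demographic_parity_gap: spread of selection rates across groups.
    equal_opportunity_gap: spread of selection rates among qualified candidates only
    (groups with no qualified candidates are left out of this comparison).
    """
    advanced_by_group: dict[str, list[bool]] = defaultdict(list)
    advanced_if_qualified: dict[str, list[bool]] = defaultdict(list)
    for c in candidates:
        advanced_by_group[c.group].append(c.advanced)
        if c.qualified:
            advanced_if_qualified[c.group].append(c.advanced)

    if not advanced_by_group or not advanced_if_qualified:
        raise ValueError("Need at least one candidate and one qualified candidate.")

    selection = [_rate(v) for v in advanced_by_group.values()]
    true_positive = [_rate(v) for v in advanced_if_qualified.values()]
    return {
        "demographic_parity_gap": max(selection) - min(selection),
        "equal_opportunity_gap": max(true_positive) - min(true_positive),
    }


if __name__ == "__main__":
    # Illustrative records only: Candidate(group, qualified, advanced)
    sample = [
        Candidate("A", True, True), Candidate("A", True, True),
        Candidate("A", False, True), Candidate("A", False, False),
        Candidate("B", True, True), Candidate("B", True, False),
        Candidate("B", False, False), Candidate("B", False, False),
    ]
    print(fairness_gaps(sample))
```

In the sample, group A is advanced 75% of the time and group B 25% of the time, a parity gap of 0.5. Among qualified candidates the rates are 100% and 50%, an equal-opportunity gap of 0.5. A gap of 0 on either metric means the groups are treated alike on that measure. The two metrics can disagree, which is one reason to decide which question you are asking before you start measuring.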

Getting buy-in: speaking everyone's language

One of the biggest challenges in bias auditing isn't technical; it's organizational. Marie's strategy for engaging HR and legal teams is straightforward:

"People listen when you speak their language. Especially when auditing for fairness, it's good to state the impact directly to both HR [and legal]. Anything that exposes risk to the business or its reputation, and has a human impact, is easily translated."

This communication strategy becomes even more critical as organizations navigate emerging regulations. The cost of non-compliance isn't just financial; it includes reputational damage and legal liability that can persist long after the initial violation.

Your starting point: 3 actions you can take today

For HR leaders ready to start improving fairness immediately, Marie offers three concrete recommendations:

- Use your ATS to dig into the data: "Absolutely use your ATS. Dig into the data: where in your process are you seeing pass-through drops?" This simple analysis can reveal bias patterns before they become systemic problems.

- Start gathering real-time feedback: "Start in real time, gathering feedback from your candidate experience surveys, your interview notes and the people you've onboarded: where are you fair and equitable in the process?" This human-centered approach catches biases that purely quantitative analysis might miss.

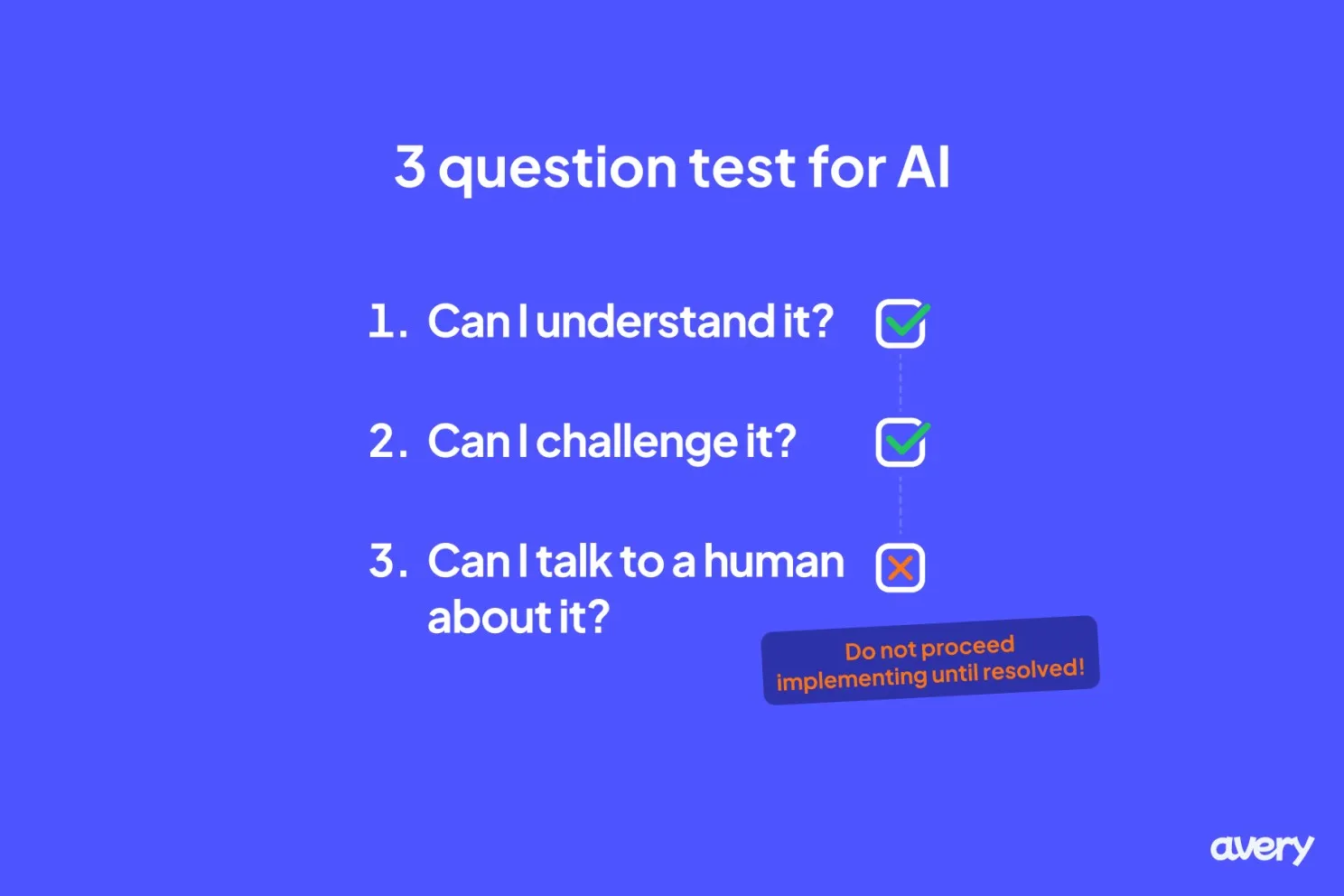

- Apply the 3-question test to any AI tool: "Can I understand it? Can I challenge it? Can I talk to a human about it?" If you can't answer yes to all three, you're not ready to implement that tool responsibly.
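The first of these steps, finding where pass-through drops, can start as a few lines of analysis over an ATS export. The following is a minimal sketch, assuming a hypothetical export with one row per candidate that gives a demographic group and the furthest stage the candidate reached. Real ATS exports vary. The stage names, function names and 0.8 comparison threshold are illustrative choices, not features of any particular ATS.

```python
from collections import Counter

# Hypothetical pipeline stages, in order; substitute the stages your ATS exports.
STAGES = ["applied", "screen", "assessment", "interview", "offer"]


def pass_through(records: list[tuple[str, str]]) -> dict[str, dict[str, float]]:
    """Per-group pass-through rate for each stage transition.

    `records` holds (group, furthest_stage_reached) pairs, an assumed export format.
    An unknown stage name raises KeyError.
    """
    index = {stage: i for i, stage in enumerate(STAGES)}
    # reached[(group, i)] counts candidates in `group` who got at least as far as stage i.
    reached: Counter = Counter()
    for group, furthest in records:
        for i in range(index[furthest] + 1):
            reached[(group, i)] += 1

    groups = sorted({group for group, _ in records})
    funnel: dict[str, dict[str, float]] = {}
    for i in range(len(STAGES) - 1):
        transition = f"{STAGES[i]} -> {STAGES[i + 1]}"
        funnel[transition] = {
            g: reached[(g, i + 1)] / reached[(g, i)]
            for g in groups
            if reached[(g, i)] > 0  # skip groups with nobody at this stage
        }
    return funnel


def flag_drops(funnel: dict[str, dict[str, float]],
               threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """(transition, group, ratio) where a group's rate is below `threshold` x the best group's."""
    flagged = []
    for transition, rates in funnel.items():
        best = max(rates.values(), default=0.0)
        if best == 0:
            continue  # nobody advanced at this transition; nothing to compare
        for group, rate in rates.items():
            if rate / best < threshold:
                flagged.append((transition, group, round(rate / best, 2)))
    return flagged


if __name__ == "__main__":
    # Illustrative records only, not real hiring data.
    sample = (
        [("men", "interview")] * 3 + [("men", "assessment")]
        + [("women", "interview")] + [("women", "assessment")] * 3
    )
    funnel = pass_through(sample)
    for transition, rates in funnel.items():
        print(transition, rates)
    print("Flagged:", flag_drops(funnel))
```

In the invented sample, both groups move through screening identically, but women pass the assessment at a third of the men's rate. This is the kind of drop Marie describes seeing in technical assessments. In real pipelines, small groups produce noisy rates, so treat a flagged stage as the place to start asking why, not as a conclusion.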

The technology question: what really matters

Rather than getting caught up in specific platform recommendations, Marie focuses on fundamental capabilities:

"Oof, most TA teams are most likely working with what they have and what's already built. Our role here is to figure out whether it can do the thing we need it to do."

This pragmatic approach acknowledges that the perfect tool doesn't exist; the key is ensuring that whatever you use can be understood, challenged, and improved.

Looking forward: the regulatory wave is coming

Marie sees regulation as inevitable:

"Regulation is definitely on its way; we see it globally, and it'll soon be a bigger focus in Europe. I think a lot of organisations are already being cautious, but we don't know to what extent yet (at least for me)."

But she's more concerned about whether organizations are asking the right questions: "With tech tooling and AI evolving as quickly as it is, I wonder if our leaders are already asking the right questions or whether they're being avoided. I'm curious to see this development, not just how we're designing, but who we're designing with and for."

This touches on a critical point: the technical capability to audit for bias is outpacing organizational readiness to act on the findings. The most sophisticated bias detection is meaningless without the commitment to make changes based on what you discover.

The unconventional advice that changes everything

Marie's final piece of advice might surprise you:

"Go wild with experimenting."

"I really feel like the time is now. It's an exciting time; a lot of people are coming through the proof-of-concept phase and starting to experiment, and I already see a shift in how we're going to use this to lead and build the future of talent. Using AI can be uncomfortable if you don't know how, and you can feel as though you're going to get left behind. So let's sit with the discomfort, ask awkward questions, make mistakes and break things, because otherwise you'll miss the boat."

This isn't reckless advice; it's a recognition that the window for shaping ethical AI hiring practices is open now. The organizations that experiment thoughtfully, with proper safeguards and continuous monitoring, will be the ones that get it right.

The bottom line

AI bias auditing isn't just about compliance; it's about building hiring processes that actually work. The organizations succeeding in this space aren't necessarily the ones with the most sophisticated technology. They're the ones asking the right questions, planning systematically, and committing to continuous improvement.

As Marie puts it, AI fairness auditing is "simply an evolution" of building fair hiring processes. The tools may be new, but the fundamental principles remain the same: transparency, accountability, and putting humans at the center of every decision.

The question isn't whether you need to audit your AI hiring tools; it's whether you can afford not to.